The Analog Neural Network

I. Introduction

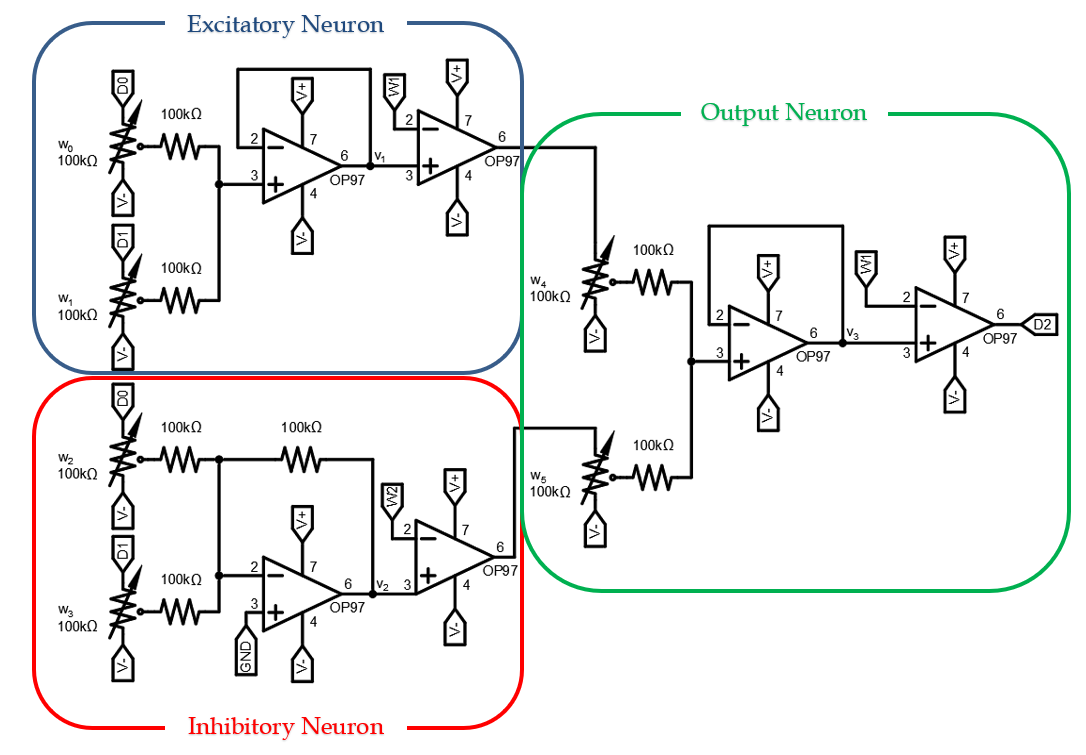

Figure 1. The Biological Neuron

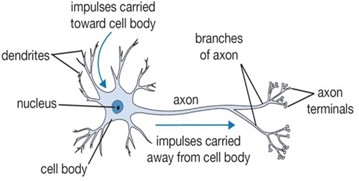

Figure 2. The Artificial Neuron

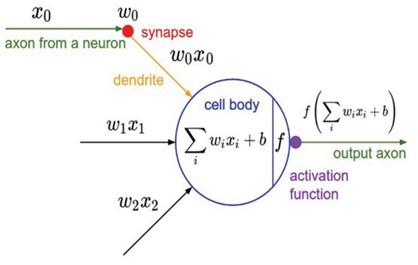

Figure 3. Artificial Neural Network

II. The Analog Neuron

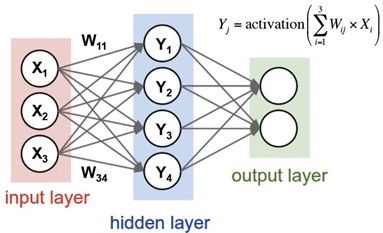

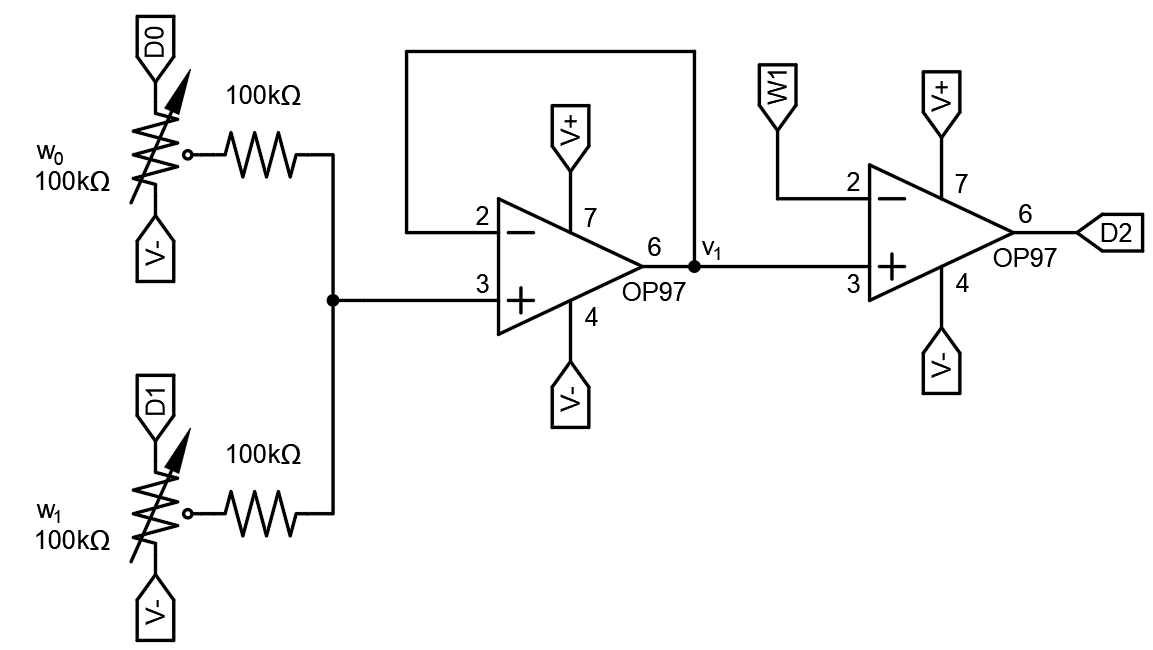

Figure 4. Analog Neuron

Our goal is to train the analog neuron to realize the logical AND function. This requires toggling the digital outputs DIO 0 and DIO 1, which drive the neuron's inputs, and adjusting the weights manually. We can also analyze the resistive portion of the circuit (the summing amplifier) with our circuit-analysis toolbox: superposition, resistive voltage dividers, KVL, and KCL. Other parameters we can vary are the weights, the threshold, and the supply voltages. The training procedure for this circuit is as follows:

- Toggle DIO 0 and DIO 1 and observe how the circuit's output changes.

- For a given set of inputs, change the weights one at a time until the output is correct. Then change the inputs and adjust the weights again until the output is correct.

- In general, if an input seems to contribute to the incorrect answer, try decreasing that input's weight. Conversely, if an input seems to contribute to the correct answer, try increasing that input's weight.

- Repeat these steps until the circuit produces the logical AND function.
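The manual procedure above is essentially a hand-run version of the perceptron learning rule. The sketch below models the neuron as an idealized weighted sum followed by a comparator, treating DIO 0 and DIO 1 as logic 0/1. The threshold (1.5), step size (0.25), and the function names `neuron` and `train` are hypothetical choices for illustration, not values or code from the lab.

```python
# Idealized model of the analog neuron (an assumption, not the lab circuit):
# the summing stage forms w0*D0 + w1*D1 and the comparator outputs 1 when
# that sum exceeds the threshold.


def neuron(d0, d1, w0, w1, threshold):
    """Return the comparator output (0 or 1) for logic inputs d0 and d1."""
    return 1 if w0 * d0 + w1 * d1 > threshold else 0


# Truth table for logical AND: (DIO 0, DIO 1) -> desired output.
AND_TABLE = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}


def train(w0=0.0, w1=0.0, threshold=1.5, step=0.25, max_passes=100):
    """Nudge the weights until the neuron reproduces the AND truth table."""
    for _ in range(max_passes):
        all_correct = True
        # Toggle the inputs through every combination.
        for (d0, d1), target in AND_TABLE.items():
            out = neuron(d0, d1, w0, w1, threshold)
            if out == target:
                continue
            all_correct = False
            # Output too low: increase the weights of the active inputs.
            # Output too high: those inputs pushed toward the wrong answer,
            # so decrease their weights. Inactive inputs (bit 0) are untouched.
            delta = step if target > out else -step
            w0 += delta * d0
            w1 += delta * d1
        if all_correct:
            return w0, w1
    raise RuntimeError("weights did not converge")


w0, w1 = train()
print(w0, w1)  # prints 1.0 1.0
```

Starting from zero weights, both weights grow together until only the (1, 1) input pushes the sum past the threshold. The "decrease" branch covers the opposite case, for example a starting point such as `train(w0=2.0)`, where DIO 0 alone already exceeds the threshold.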

III. The Analog Neural Network

Note that the excitatory and inhibitory neurons use different architectures. The inhibitory neuron is similar to the excitatory neuron discussed thus far, but it uses an inverting configuration: a weighted voltage-summing inverting amplifier followed by a voltage comparator for activation. The voltage at the output of the summing amplifier is given by:

$$v_2 = w_2 D_0 + w_3 D_1$$
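To see what this relationship predicts, the sketch below tabulates v2 for all four combinations of D0 and D1. It assumes an ideal op-amp, for which each input of an inverting summing amplifier has gain w_i = -R_f / R_i; under that assumption the weights are negative, so the inversion is carried by w_2 and w_3 themselves. The resistor values and the 3.3 V logic-high level are hypothetical, chosen only for illustration. The sum is built one input at a time, which is the superposition argument from the circuit-analysis toolbox in Section II.

```python
import math
from itertools import product

# Hypothetical component values, chosen only for illustration.
R_F = 10e3              # feedback resistor, ohms
R_D0 = 10e3             # input resistor for D0, ohms
R_D1 = 20e3             # input resistor for D1, ohms
V_HIGH = 3.3            # assumed voltage of a logic-1 DIO pin, volts


def weight(r_in, r_f=R_F):
    """Gain of one input of an ideal inverting summing amplifier: -R_f / R_in."""
    return -r_f / r_in


W2 = weight(R_D0)       # -1.0 with the values above
W3 = weight(R_D1)       # -0.5 with the values above


def v2(d0, d1):
    """v2 = w2*D0 + w3*D1, with d0 and d1 given as logic bits (0 or 1)."""
    # Superposition: add each input's contribution independently.
    return sum(w * bit * V_HIGH for w, bit in ((W2, d0), (W3, d1)))


for d0, d1 in product((0, 1), repeat=2):
    print(f"D0={d0} D1={d1}  v2 = {v2(d0, d1):+.3f} V")

# The response to both inputs equals the sum of the single-input responses.
assert math.isclose(v2(1, 1), v2(1, 0) + v2(0, 1))
```

Because each input contributes independently, v2(1, 1) equals v2(1, 0) + v2(0, 1), which the final assertion checks.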